The term artificial intelligence shows up everywhere now, and it is said to change the way you do business. But a lot of what is sold as artificial intelligence today is more hype than reality. It is a powerful technology, but many people misunderstand it. Artificial intelligence cannot think on its own. It cannot reason or come up with new ideas.

If you are a business leader, you should look past the excitement and understand how this technology really works. This guide breaks down the real technology behind artificial intelligence, with a focus on large language models (LLMs). It will help you see what AI technology really is, and what it is not.

Defining Artificial Intelligence in Everyday Language

Artificial intelligence is a field of computer science that tries to build machines that can do tasks people usually do with their minds, such as problem-solving, understanding language, and making decisions. A long-term goal is artificial general intelligence (AGI): a machine that could do any mental task a person can do. Right now, AGI is still just an idea.

Today’s AI systems are not alive. They don’t have feelings or self-awareness. They are built for specific jobs and do them well, most of the time. It is important to know the difference between these AI tools and real human intelligence, because that knowledge helps you use artificial intelligence in your business the right way. Below, we look at what artificial intelligence is, its main features, and how it differs from regular computer software.

What Does “AI” Really Mean?

When you hear the words artificial intelligence, you may think of a machine that can think on its own. But we’re still not quite there yet. The AI you use each day is really a smart copycat. It does not think for itself. It just does a great job at spotting patterns.

So, what is artificial intelligence in simple words? It is when computer systems do things that typically require human thinking. Most AI systems, including large language models, learn from big sets of data; a language model, for example, works by predicting the next word in a sentence. This can make the machine look as if it shows human intelligence, but the process is all math. This point matters for any business person. AI today is not about making a mind that acts like us. It is about using a powerful tool for specific tasks. What you get is pattern matching from an algorithm, not creativity. Knowing this helps you get the most out of artificial intelligence and be clear about what it can and cannot do. Do not be fooled by the buzz about general intelligence; focus on what computer systems and language models can actually do.

Key Features and Characteristics of AI

AI systems are good at working with data in a way that resembles how people learn. They can spot complex patterns in large datasets, which most conventional software cannot do well. Because of this, AI systems can make predictions or sort information even if you do not spell out every step.

AI systems do not have to stay the same over time. They can be retrained or updated as new data arrives, and each update can make them more accurate. This is why businesses use AI systems when the work has to keep up with a changing market. So how do these systems work?

These systems have parts that help them do things most other systems cannot do. The main features are:

- Learning from Data: AI systems can take in large amounts of data and find links and complex patterns inside it.

- Adaptability: AI systems can change how they behave as they get new information or feedback, which helps them be right more often.

- Automation: They can do work, from easy jobs to hard ones, often without human help.

How AI Differs From Traditional Software

Traditional software uses a set of fixed rules. A developer writes clear code to tell the program what to do in each case. If something new comes in and it is not written in the code, the software will not be able to handle it. This can make the system fail or give an error. Its way of working is fixed until someone changes it.

AI systems work in a different way. Instead of getting detailed directions, these systems learn from data. In computer science, this is what makes artificial intelligence special and sets it apart from older systems. The rules and patterns come from the data, so AI systems can deal with new things. They do not need to be programmed for every outcome.

The ability to learn is the big difference here. While traditional software stays the same, artificial intelligence can change over time. Machine learning is the process that lets AI systems improve and make choices using experience. It is the main way artificial intelligence gets better and makes predictions instead of just following set orders.

The Core Principles and Components Behind AI Systems

To really understand how AI systems work, you need to know about three main things: data, algorithms, and models. These parts work together. First, the AI system learns from data. Then, it uses what it learned to make predictions or decisions. Machine learning is at the heart of this, and deep learning is an important subset of machine learning.

Ideas from computer science let AI systems do more than follow basic step-by-step commands, so they can act in ways that seem like real thinking. In the next parts, you will see how data is used in training, what kinds of models AI systems use, and how AI systems get better over time.

Data, Algorithms, and Training

Artificial intelligence starts and ends with data. To build an AI system, you give it large amounts of data, called training data, and the AI learns from it. The AI studies this data to find patterns, links, and structure. The quality and quantity of the data you use largely decide how well the resulting model works.

Algorithms are the instructions that tell the AI system what to do with the information and how to learn from it. They look for links in the training data. For example, if you feed it enough product reviews, the algorithm may learn which words people use when they are happy.
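To make the review example concrete, here is a minimal sketch, assuming a tiny hand-labeled set of reviews. The data and the function names (`train`, `score`) are made up for illustration; real systems use far more data and more sophisticated algorithms. The “algorithm” here is plain word counting: it learns which words show up more often in happy reviews, then scores new text.

```python
from collections import Counter

# Hypothetical labeled training data: (review text, is_happy)
reviews = [
    ("great product love it", True),
    ("love the fast shipping", True),
    ("terrible quality broke fast", False),
    ("awful support never again", False),
]

def train(examples):
    """Count how often each word appears in happy vs. unhappy reviews."""
    happy, unhappy = Counter(), Counter()
    for text, is_happy in examples:
        (happy if is_happy else unhappy).update(text.split())
    return happy, unhappy

def score(text, happy, unhappy):
    """A positive score means the words lean 'happy' in the training data."""
    return sum(happy[w] - unhappy[w] for w in text.split())

happy, unhappy = train(reviews)
print(score("love it", happy, unhappy))           # 3
print(score("terrible support", happy, unhappy))  # -2
```

Notice that nobody wrote a rule saying “love” is positive; the association comes entirely from the labeled examples.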

Every AI system has a few basic parts that work together and cannot be pulled apart:

- Training Data: The fuel the AI system learns from. It can be text, pictures, or any other kind of information.

- Algorithms: These are the directions that show the AI model how to use the data.

- Training Process: This is the key step, where you keep on giving data to the AI so that the algorithms can learn better each time. This makes the artificial intelligence more accurate over time.

Model Architectures Explained

A model architecture is the blueprint for an AI system. It shows the parts of the system, how they fit together, and how information flows through them. One common architecture is the neural network, which is loosely inspired by the human brain.

A neural network is made up of layers of connected nodes, often called “neurons.” The first layer takes in information and passes it to the next. Some networks have only a few layers, while deep neural networks can have dozens or even hundreds. This layered setup lets the early layers detect simple features and the later layers combine them into more complex ones.
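A small forward pass shows how information moves layer by layer. This is an illustrative sketch with hand-picked, hypothetical weights; in a real network the weights are learned during training, and there are far more neurons and layers. Each `dense` layer takes a weighted sum of its inputs, adds a bias, and applies an activation function.

```python
import math

def relu(x):
    return max(0.0, x)

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dense(inputs, weights, biases, activation):
    """One layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [
        activation(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
        for neuron_w, b in zip(weights, biases)
    ]

# Hypothetical weights for a 2-input -> 3-neuron -> 1-neuron network.
hidden_w = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]
hidden_b = [0.0, 0.1, 0.0]
output_w = [[1.0, -0.5, 0.7]]
output_b = [0.2]

def forward(x):
    h = dense(x, hidden_w, hidden_b, relu)         # layer 1: simple features
    return dense(h, output_w, output_b, sigmoid)   # layer 2: combines them

print(forward([1.0, 2.0]))  # a single value between 0 and 1
```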

Different types of AI often depend on their model architecture. For example, convolutional neural networks are good at image recognition, while transformers are designed for data that comes in order, like human language. Picking the right architecture helps the AI system do its job well.

Learning and Adaptation: How AI Improves Over Time

AI does more than just do tasks. It learns from what comes in. How does artificial intelligence get better at things? It can update how it works by using new data, feedback, and different ways to learn.

One important approach is reinforcement learning. Here, the model learns by trial and error, step by step. It gets a reward when an action works out well and a penalty (or a lower reward) when it does not, so it adjusts its behavior to earn more reward next time. This approach is often used in game-playing AI and robotics, where the system must find the best way to act.
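The reward-driven loop can be sketched with an epsilon-greedy “multi-armed bandit,” a classic toy problem chosen here for illustration; real game-playing and robotics systems are far more complex. The payout probabilities are made up, and the agent never sees them: it only observes rewards and updates its own estimates.

```python
import random

def run_bandit(true_payouts, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent: usually exploit the best-looking action, sometimes explore."""
    rng = random.Random(seed)
    n = len(true_payouts)
    estimates = [0.0] * n  # the agent's learned value for each action
    counts = [0] * n       # how many times each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n)                           # explore: try something random
        else:
            action = max(range(n), key=lambda a: estimates[a])  # exploit: best so far
        # The environment pays a reward of 1 with the action's hidden probability.
        reward = 1.0 if rng.random() < true_payouts[action] else 0.0
        counts[action] += 1
        # Running average: move the estimate toward the reward just received.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

# Hypothetical payout rates; the agent never sees these directly.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
print("times each action was chosen:", counts)
```

Over time the agent settles on the action with the highest payout, without ever being told which one it is.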

There are other approaches too. In unsupervised learning, the model finds patterns in data without any example answers. In supervised learning, it learns from labeled examples, such as questions paired with correct answers. These machine learning methods let a model improve through training: its accuracy can grow with more data and feedback, without anyone rewriting its code by hand.
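Unsupervised learning can be illustrated with k-means clustering, one common technique chosen here as an example (the text does not name a specific method). This sketch groups made-up order sizes into two clusters without being told what the groups are.

```python
def kmeans_1d(points, centers, iterations=10):
    """Group unlabeled numbers into clusters; no 'right answers' are given."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            # Assign each point to its nearest center.
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers

# Hypothetical order sizes: two natural groups, but no labels say so.
orders = [1, 2, 2, 3, 20, 21, 22, 23]
print(kmeans_1d(orders, centers=[0.0, 5.0]))  # [2.0, 21.5]
```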

Modern AI: Large Language Models (LLMs) Unveiled

The big jump in AI capabilities in recent years is largely due to large language models. These models are a type of generative AI that can process and write human-like text. ChatGPT is one example. It is not a new kind of general intelligence; it is a clever application of this technology.

Large language models are why AI is so useful now. They use deep learning and natural language processing to make new content. To really know how AI works today, you need to learn about language models, how they work, and what they cannot do.

What Is a Large Language Model (LLM)?

A Large Language Model (LLM) is a type of AI that works with natural language. You can think of it as a very smart tool that finds patterns in the words you use every day. It has been trained on a huge amount of text from the internet, books, and other places. This training helps it learn how words, phrases, and sentences fit together.

This is where the difference between traditional programming and machine learning matters. LLMs are a product of machine learning. They do not start with set grammar rules or a list of what words mean. Instead, they learn which words are likely to go together by looking at a huge number of examples. This lets an LLM produce sentences that sound clear and natural, much like something a person might write.
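A toy “bigram” model makes this idea concrete. It is vastly simpler than an LLM, which uses a neural network rather than a lookup table, but it shows the same principle of learning word patterns from examples instead of rules. The corpus and function names are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which; no grammar rules or definitions involved."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probs(follows, word):
    """Turn raw counts into probabilities for the next word."""
    counts = follows[word]
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}

corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```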

Even though LLMs seem smart, they do not really understand what they read or write. These models work by guessing the next word in a sentence using patterns they have seen before during training. So, when they help answer questions, write messages, or give a summary, they are just picking out the best word order for the task. They do not actually know what any of it means.

How Do LLMs Process and Generate Text?

AI-powered applications like ChatGPT work in a few main steps. When you give the app a prompt, the large language model first splits your text into small pieces called tokens. This step is called tokenization. A token can be a whole word or just part of a word. Each token is then mapped to a number, and these numbers go into the model’s neural network.
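A toy tokenizer illustrates the idea. This sketch assumes a tiny, hand-written vocabulary and a greedy longest-match rule; real LLM tokenizers (for example, ones based on byte-pair encoding) learn tens of thousands of pieces or more from data and can handle any input. The point is simply that text becomes a list of pieces, and each piece becomes a number.

```python
# Hypothetical toy vocabulary mapping text pieces to token IDs.
VOCAB = {"un": 0, "believ": 1, "able": 2, "the": 3, "cat": 4, " ": 5}

def tokenize(text):
    """Greedy longest-match: repeatedly take the longest vocabulary piece that fits."""
    tokens = []
    i = 0
    while i < len(text):
        for end in range(len(text), i, -1):  # try the longest candidate first
            piece = text[i:end]
            if piece in VOCAB:
                tokens.append(piece)
                i = end
                break
        else:
            raise ValueError(f"no token for {text[i]!r}")
    return tokens

pieces = tokenize("the unbelievable cat")
ids = [VOCAB[p] for p in pieces]
print(pieces)  # ['the', ' ', 'un', 'believ', 'able', ' ', 'cat']
print(ids)     # [3, 5, 0, 1, 2, 5, 4]
```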

Inside the neural network, the real power of generative AI shows up. Using patterns learned during training and stored in billions of parameters, the model calculates how likely each possible token, or piece of a word, is to come next in your text. It does not always pick the single most likely option. Instead, it samples from the likely candidates, which keeps responses varied and makes them feel more natural and human.
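The sketch below shows one common way this choice is made: the model’s raw scores are turned into probabilities with a softmax function, and the next token is sampled from them. The candidate tokens and scores are invented for illustration, and `temperature` is a widely used setting for how adventurous the sampling is; actual products may combine it with other sampling settings.

```python
import math
import random

def softmax(scores, temperature=1.0):
    """Convert raw model scores (logits) into probabilities that sum to 1."""
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_next(tokens, scores, temperature=1.0, rng=random):
    """Pick one token at random, weighted by its probability."""
    probs = softmax(scores, temperature)
    return rng.choices(tokens, weights=probs, k=1)[0]

# Hypothetical scores a model might assign after "The capital of France is".
candidates = ["Paris", "London", "pizza"]
logits = [5.0, 2.0, -1.0]
print(softmax(logits))                   # "Paris" gets most of the probability
print(softmax(logits, temperature=2.0))  # higher temperature flattens the odds
print(sample_next(candidates, logits, rng=random.Random(42)))
```

Because the final choice is random (weighted by probability), asking the same question twice can produce different wording.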

The model repeats this step for every token. It adds the chosen token to the text, then uses the longer text to predict what comes next, looping until the answer is complete. This way of working lets powerful language models handle many natural language processing tasks, such as translating between languages or creating new content.
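The predict-append-repeat loop is easy to sketch. Here `toy_model` is a hypothetical stand-in that only looks at the last token; a real LLM conditions on the whole text so far, but the outer loop has the same shape.

```python
def generate(predict_next, prompt_tokens, max_new_tokens=10, stop="<end>"):
    """Autoregressive loop: predict one token, append it, feed the longer text back in."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        nxt = predict_next(tokens)  # the model only ever predicts ONE next token
        if nxt == stop:
            break
        tokens.append(nxt)
    return tokens

# Hypothetical stand-in for a trained model: looks only at the last token.
TABLE = {"the": "capital", "capital": "of", "of": "France", "France": "is",
         "is": "Paris", "Paris": "<end>"}

def toy_model(tokens):
    return TABLE.get(tokens[-1], "<end>")

print(" ".join(generate(toy_model, ["the"])))  # the capital of France is Paris
```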

Limitations: Memory, Creativity, and Thought in LLMs

Large language models can do many things, but they do not “think” like people do. Their clever answers come from patterns in their training data, not from real thinking. These models do not have real creativity, feelings, or the ability to come up with truly original ideas. When something they produce looks creative, it is really a new combination of things they learned before.

Language models also have limited memory. Within one chat, they can use the details that fit in the context window, which is the amount of text the model can consider at once. This memory does not last. Once the chat ends, or once the conversation grows past the window, the model can no longer see the earlier text. The model itself does not keep learning from each conversation or remember you over time.
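A minimal sketch of why older messages drop out: if the conversation is longer than the window, something has to be cut, and a simple policy is to keep only the most recent messages that fit. This is an illustrative assumption, not how any particular product manages context, and counting words stands in for counting real tokens.

```python
def fit_to_context(messages, max_tokens, count_tokens=lambda m: len(m.split())):
    """Keep only the most recent messages that fit in the context window.

    Older messages are dropped, which is why the model 'forgets' them.
    Counting words is a rough, hypothetical stand-in for a real tokenizer.
    """
    kept, used = [], 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(msg)
        if used + cost > max_tokens:
            break
        kept.append(msg)
        used += cost
    return list(reversed(kept))  # restore chronological order

chat = ["my name is Ana", "I run a bakery", "what should I post today?"]
print(fit_to_context(chat, max_tokens=9))  # ['I run a bakery', 'what should I post today?']
```

With this window the model never sees “my name is Ana,” so it cannot use the name in its reply.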

The most important things you need to know are:

- No True Understanding: These models manipulate tokens based on statistical probabilities. They do not know what the words mean.

- Limited Memory: They forget your past chats. They cannot remember what you did before.

- Lack of Originality: They are good at mixing ideas they already know, but they do not truly invent or create new things.

How Does an LLM Actually Work? Step-by-Step

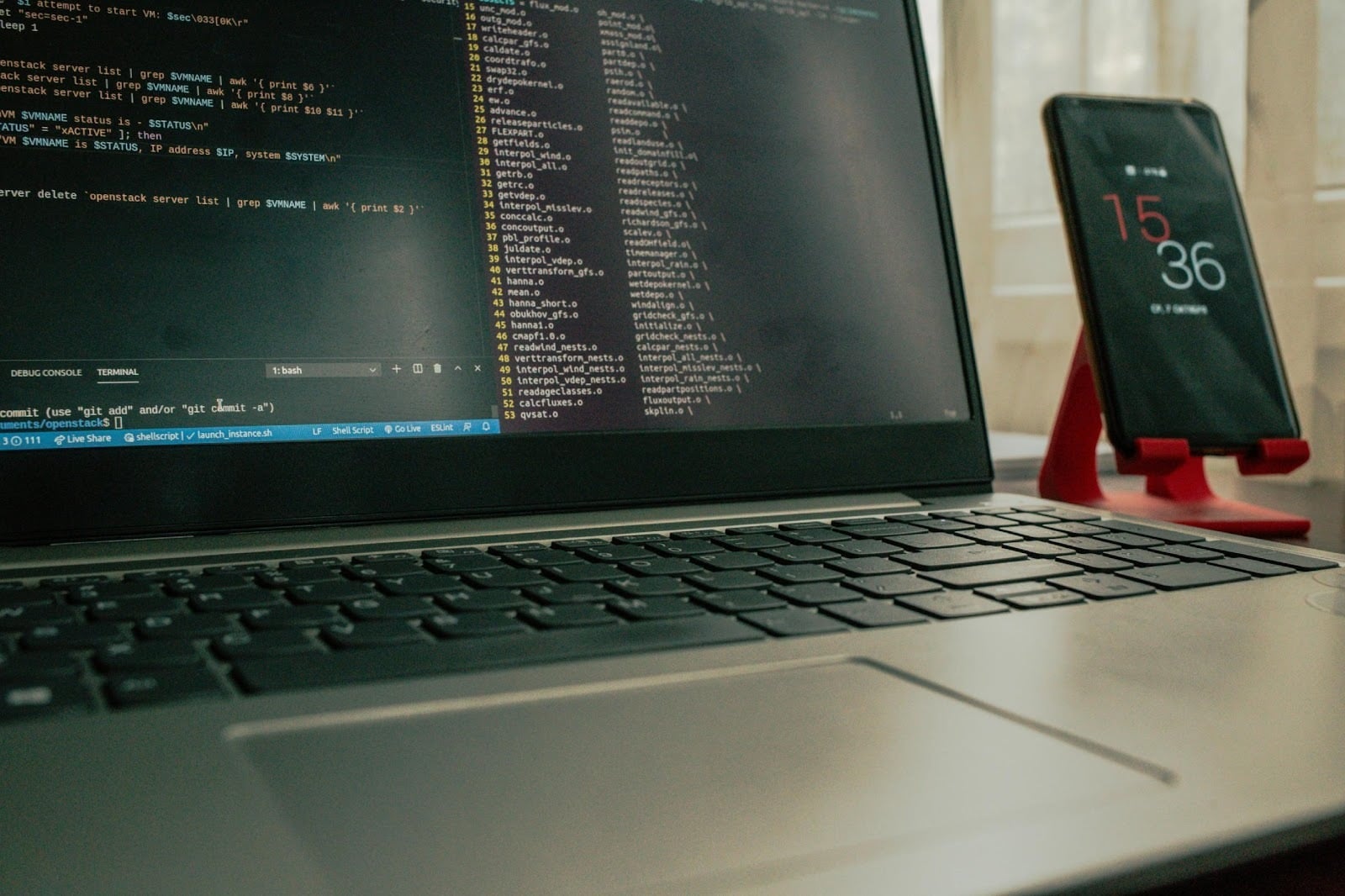

Let’s take a closer look at how an LLM works. How does artificial intelligence create text each time you send a message? There are two main phases. First, the system goes through a long training phase, which takes a lot of time and a huge amount of data. Then, each time you use the system, a much faster inference phase runs, in which the model quickly builds a reply based on what you asked.

Both phases rely on deep learning to turn what was learned in training into clear answers. Once you understand this process, you can see how artificial intelligence produces words that sound as if they came from a person. It is all math and software working together; there is no thinking or feeling involved. Let’s look at how these AI systems are built and how they use what they learned to answer your questions.

Training an LLM: Data Input and Model Building

Training an LLM is a huge job. It starts with collecting an enormous training dataset, often terabytes or even petabytes of text, drawn from the internet, digital books, and many other sources. This large body of human language is the raw material the model learns from. The goal is to expose the model to as many patterns of words and facts as possible.

To build the model, the data is fed into a deep neural network, most often a transformer. The model learns by trying to guess the next word in a sequence or to fill in missing words. It checks its guesses against the real words in the dataset, then adjusts its internal settings (its parameters) to make future guesses better.
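Here is a heavily simplified sketch of that guess-check-adjust loop. It assumes a four-word vocabulary and a “model” that is just a table of scores (one row per current word) rather than a transformer, but it uses next-token prediction with a cross-entropy loss and gradient descent, the same kind of objective used to train LLMs at vastly larger scale. All names and the sample text are illustrative.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train_next_token(words, vocab, lr=0.5, epochs=200):
    """Learn a table of scores W[current][next] by repeated guess-check-adjust steps."""
    idx = {t: i for i, t in enumerate(vocab)}
    W = [[0.0] * len(vocab) for _ in vocab]  # the model's learnable parameters
    pairs = list(zip(words, words[1:]))      # (current word, actual next word)
    for _ in range(epochs):
        for cur, actual in pairs:
            row = W[idx[cur]]
            probs = softmax(row)  # 1. guess: probabilities for every next word
            for j, p in enumerate(probs):
                target = 1.0 if j == idx[actual] else 0.0  # 2. check against the data
                # 3. adjust: cross-entropy gradient for each score is (p - target)
                row[j] -= lr * (p - target)
    return W, idx

def predict(W, idx, vocab, word):
    """Return the most likely next word according to the learned scores."""
    row = W[idx[word]]
    return vocab[row.index(max(row))]

vocab = ["the", "cat", "sat", "mat"]
words = "the cat sat the cat sat the mat".split()
W, idx = train_next_token(words, vocab)
print(predict(W, idx, vocab, "the"))  # cat
```

Since “the” is followed by “cat” twice and by “mat” once, training pushes the score for “cat” highest, purely from the data.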

The model repeats this over and over, across billions or even trillions of words of text. This gradually sets the values of its billions of internal parameters. The process needs large numbers of specialized processors (GPUs) and a lot of time, often weeks or even months. After all this work, you get a model that has captured a vast number of patterns in human language.

Inference: How Models Generate Responses

Inference is the stage where a trained LLM starts working. When you give it a prompt, the model does not look for an answer in a set list. Instead, it uses its learned settings to make a reply from scratch. This is at the heart of generative AI, as it makes new content that was not there before.

So, how do AI-powered apps like ChatGPT work in practice? The model takes what you write, runs it through its neural network, and predicts the next word, one at a time, until it has a complete answer. At every step, it calculates a probability for each possible next word and then chooses one: usually a highly likely option, but not always the single most likely. In this way it builds the whole reply word by word.

Because of this generative process, you can get different replies even if you ask the same thing more than once. The model may go in a new direction, but it is still likely to give you a response that makes sense. You end up getting new content, made right then and there, based on what the model has learned during training.

Why “Thinking” Isn’t Part of LLMs’ Abilities

Many people think artificial intelligence can think, but this is not true. This idea comes from science fiction movies and stories. In real life, large language models work using math and statistics. They do not have real thoughts or feelings like the human brain. These language models do not have beliefs or know what they are doing. There is no awareness in them.

When a large language model writes a reply, it is not thinking. It is using its training data to guess what words come next. It looks at your prompt and picks the next words based on what it learned before. The model is a tool for text prediction, not a mind with ideas. It does not truly know that Paris is the capital of France. It only sees that people often write “the capital of France is Paris” together.

This is why these language models cannot think like people. There is no inside world or real knowledge in them. There is no real understanding of the words you give them. When you know how they work, you can use them to do language jobs quickly. They are not like the human brain, but they are great at finding and using patterns in text.

A Brief History of AI and the Birth of LLMs

The journey to modern AI did not start with the internet or chatbots. The idea of a thinking machine goes back a long time, but real progress in AI began in the mid-20th century, when people started to ask what computers could do. The first AI systems followed set rules and could only do simple things. They were very different from the game-changing language models we have now.

Over the years, there were important milestones, such as IBM’s Deep Blue, followed by renewed work on neural networks. This history shows how AI moved from strict, hand-written rules to flexible, data-driven learning. That shift made it possible to create the language models and other smart systems we use now.

Early Milestones in Artificial Intelligence

The story of artificial intelligence started in the 1950s. Alan Turing, one of the founders of computer science, asked, “Can machines think?” He proposed what became known as the “Turing Test,” which checks whether a person can tell a machine’s conversation apart from a human’s. This idea got people thinking about how machines could be made to act intelligently.

In 1956, a workshop at Dartmouth College launched “artificial intelligence” as a named field; the term came from the organizers’ proposal for the event. Many people working in computer science attended. There, Allen Newell, Herbert Simon, and Cliff Shaw presented the Logic Theorist, often called the first real AI program. The researchers were hopeful, but computers at that time were slow and limited, so the programs could not do much.

Seen from this point in history, AI began with people writing programs to solve simple, logical problems. Later milestones, like IBM’s Deep Blue beating world chess champion Garry Kasparov in 1997, showed how good AI could be at clearly defined tasks. Events like this encouraged people to set bigger goals for artificial intelligence.

From Rule-Based Systems to Neural Networks

The first AI applications used rule-based systems. People would write a list of “if-then” rules and give them to the computer. For example, a medical system might say, “IF the patient has a fever and a cough, THEN suspect the flu.” These systems worked well for specific tasks, but they were not flexible. The computer could not handle anything that was not on the list.
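The medical example can be written as a tiny rule engine. The rules and names below are hypothetical and only illustrate the style of early “expert systems”: every conclusion has to be written by hand, and a case outside the rule list simply gets no answer.

```python
# Hypothetical rule base in the style of early "expert systems".
# Each rule: IF all these symptoms are present, THEN draw this conclusion.
RULES = [
    ({"fever", "cough"}, "suspect the flu"),
    ({"sneezing", "itchy eyes"}, "suspect allergies"),
]

def diagnose(symptoms):
    """Fire every rule whose IF-part is fully matched by the given symptoms."""
    present = set(symptoms)
    return [conclusion for conditions, conclusion in RULES if conditions <= present]

print(diagnose(["fever", "cough", "headache"]))  # ['suspect the flu']
print(diagnose(["rash"]))                        # [] : no rule covers this case
```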

The big change came when neural networks saw a revival. These models are loosely inspired by how the human brain works. They do not need someone to program every rule; instead, they learn rules from the data they are given. This marked a shift from logic-based AI systems to learning-based ones. In the 1980s, the backpropagation algorithm became popular as a way to train networks with several layers, letting them get better at tasks by training on data.

This shift was important for AI applications, because humans no longer had to think of every single rule for the computer. Deep learning, which grew out of this neural network research, uses large amounts of data to find patterns and connections on its own. That is what sets today’s types of AI apart from earlier rule-based tools, which were limited to the rules people wrote.

The Emergence and Growth of Transformer Models

The biggest step forward in recent AI history came in 2017 with the invention of the transformer architecture. Before transformers, deep learning models such as recurrent neural networks processed text one step at a time and struggled to keep track of context across long sentences or passages. This made many natural language tasks hard for them.

Transformer models changed this with a mechanism called “attention.” Attention lets the model weigh which words in the text matter most when it processes each word, so it can use context even when related words are far apart. With this, AI got much better at handling whole documents.
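The core computation, scaled dot-product attention as described in “Attention Is All You Need,” fits in a few lines. This sketch uses tiny made-up vectors and skips the learned projections, multiple attention heads, and batched matrix math that real transformers use; it only shows how attention weights decide how much each word contributes.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    For each query, score every key by dot product, scale by sqrt(dimension),
    turn the scores into weights with softmax, and return the weighted mix of values.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical 2-D vectors for three words; real models learn vectors with
# hundreds or thousands of dimensions.
keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [[4.0, -4.0]]  # a query that matches the first key best
out, w = attention(query, keys, values)
print(w[0])    # most of the weight goes to the first word
print(out[0])  # so the output is mostly the first word's value
```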

This breakthrough made it possible to build the large language models we use now, such as GPT and Claude. The transformer is now the foundation of almost all leading generative AI models and tools, and as research in deep learning and language models moves forward, this design continues to drive most progress in generative AI and LLM technology.

Pioneer Inventors and The Evolution of LLM Technology

Modern language models did not come from one person. The work is the result of years of research from many people and groups. Early on, some thinkers started to imagine smart machines. Later, more people built on these ideas. Engineers made the transformer architecture, and that was a big step forward. Every age added its own part, and important discoveries were made along the way.

AI development changed because of a few key ideas, which moved the focus from hand-written rules to methods that learn from data. Today, language models do things that were not possible before. Now, let’s see who helped make this shift, and which breakthroughs changed how we think about AI.

Key Breakthroughs: Who Changed the Game?

A few big moments in computer science helped bring AI systems out of theory and into real use. Along the way, pioneers introduced ideas we still hear about today and laid the foundation that later AI development built on.

No single person invented modern AI. Still, some people made changes that shaped the types of AI we see now. These visionaries wanted to do more with machines and showed that machines could move from simple logic to learning from data.

Key people and moments in this story include:

- Alan Turing: In 1950, he asked whether machines could think and proposed what became known as the “Turing Test.” This framed much of the later discussion about AI.

- Frank Rosenblatt: Starting in the late 1950s, he developed the perceptron and built the Mark I Perceptron, one of the first machines based on a neural network. He showed that a machine could learn by trying, making mistakes, and then trying again.

- Google researchers: In 2017, their paper “Attention Is All You Need” introduced the transformer architecture, which now underlies most modern LLMs.
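The perceptron’s learning rule is short enough to sketch. This is a textbook version written for illustration, not a reconstruction of the Mark I hardware: it nudges its weights only when it gets an example wrong, which is the “learn from mistakes” idea described above. The function names and the AND task are illustrative choices.

```python
def train_perceptron(examples, epochs=10, lr=1.0):
    """Perceptron learning rule: adjust weights only when a prediction is wrong."""
    n = len(examples[0][0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        for x, target in examples:  # target is 0 or 1
            activation = sum(w * xi for w, xi in zip(weights, x)) + bias
            predicted = 1 if activation > 0 else 0
            error = target - predicted  # 0 if right, +1 or -1 if wrong
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def predict(weights, bias, x):
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0

# Learn logical AND from four labeled examples.
AND = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
w, b = train_perceptron(AND)
print([predict(w, b, x) for x, _ in AND])  # [0, 0, 0, 1]
```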

The Shift From Classic AI to Language Models

The rise of language models marks a big change in how people approach AI. Classic AI focused on logic, knowledge, and reasoning. People built systems that would “think” by manipulating symbols and rules, like solving a puzzle step by step. This worked in some narrow areas but could not cope with the messiness of the real world.

Today’s language models show a new way of looking at AI. Instead of making rules for everything, the focus shifted to machine learning. The idea is clear. If a model sees enough examples, it can find patterns by itself. There is no need to teach it all the rules in detail.

This new path needed two things: huge sets of data, which mostly come from the internet, and strong computers, especially GPUs. Both of these came together, allowing the building and training of very big neural networks. Now, these powerful language models lead in AI. They do not use reason like old AI systems did. They predict what comes next by looking at data.

Major Organizations Leading LLM Innovation

The development of cutting-edge AI technology is now dominated by a handful of major organizations with the resources to fund massive research and development efforts. These companies and research labs are responsible for creating the foundational models that power most of the LLM platforms you see today. Which organizations are leading AI and LLM advancements?

These leaders invest billions in compute power and talent to train and refine ever-larger and more capable models. Their breakthroughs set the pace for the entire industry, with smaller companies often building their applications on top of these core platforms.

Here are some of the major organizations at the forefront of LLM innovation:

| Organization | Key Contribution / Platform | Focus Area |
|---|---|---|
| OpenAI | GPT series (e.g., ChatGPT) | Creating powerful, general-purpose generative AI models. |
| Google | Gemini, LaMDA, BERT | Integrating AI into search and enterprise products. |
| Meta | Llama series | Advancing open-source models to foster wider development. |
| Anthropic | Claude series | Focusing on AI safety and creating helpful, harmless systems. |

Comparing Top LLM Platforms: ChatGPT, Claude, Perplexity & More

There are a lot of AI tools available now, so many people feel unsure which one to pick. Most of these tools are built on just a few large language models. If you learn how ChatGPT, Claude, and Perplexity differ from one another, you can better pick the one that fits your needs.

These tools share the same basic technology, but there are differences in how they work. Some are better for creative writing, some focus more on factual accuracy, and others excel at natural conversation. Let’s look at what makes each of them special.

What Makes ChatGPT Unique?

ChatGPT, made by OpenAI, is well known for conversing in a way that feels natural. Its main strength is how flexible it is with natural language. It helps with many tasks, like drafting emails, writing code, brainstorming ideas, and explaining complex topics in simple terms.

Part of what makes ChatGPT stand out is the scale of its training. It is also refined with reinforcement learning from human feedback (RLHF), which helps its answers match what users mean, stay on track in conversations, and follow instructions. It is especially good at creative jobs and first drafts.

But this generative AI can favor sounding fluent over being accurate. As it works to produce text that sounds right, it may sometimes “hallucinate,” making up facts or sources. ChatGPT is best used for creative tasks and natural language writing, not for checking facts.

Claude and Its Approach to Natural Language

Claude is made by Anthropic and is designed with safety in mind. Its main goal is to give useful, honest, and safe answers, which sets Claude apart in the world of LLMs. It converses well, and its creators worked hard to make it less likely to say anything biased or harmful.

One thing that helps Claude stand out is a large context window. This feature lets it handle much more text at once than many other models. Because of this, tasks like summarizing long reports and looking at legal documents are easier to do. It can answer with helpful details from a lot of information and keep track of how human language is used even over many thousands of words.

Anthropic trains Claude using a method called “Constitutional AI.” The model is taught to follow a written set of key principles, like a constitution, which reduces the need for people to review its behavior all the time. This makes it a good choice for businesses that need safety and consistent results.

DeepSeek and Perplexity: Alternative LLMs

Beyond the well-known options, newer large language models and tools offer special strengths. Perplexity AI, for one, has made a name for itself as an “answer engine.” How does it differ from ChatGPT? While ChatGPT is mainly known for generating text from what it learned in training, Perplexity combines language models with real-time web search. It gives conversational answers and shows where the facts come from with citations and links. This makes it a very good tool for checking facts or doing research.

DeepSeek is another family of large language models, known for strong results in coding and math. Many DeepSeek models are released openly, so anyone can download them, use them, or build on them without much trouble. This makes DeepSeek a good fit for developers and more technical work.

These alternatives show that the world of large language models is not one-size-fits-all. Whether you want a research helper like Perplexity or programming help from DeepSeek, models are being built and improved for many important uses beyond regular conversation.
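The general pattern behind answer engines is often called retrieval-augmented generation: search first, then hand the results to a language model with instructions to cite them. The sketch below shows only the retrieval and prompt-building half, using simple keyword overlap and a made-up document set at placeholder URLs; it is not how Perplexity actually ranks results.

```python
import re

# Hypothetical mini "web index"; a real answer engine queries live search results.
DOCS = {
    "https://example.com/france": "Paris is the capital of France.",
    "https://example.com/italy": "Rome is the capital of Italy.",
    "https://example.com/pizza": "Pizza originated in Naples, Italy.",
}

def words(text):
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question, k=2):
    """Rank documents by how many words they share with the question."""
    q = words(question)
    ranked = sorted(DOCS.items(), key=lambda item: len(q & words(item[1])), reverse=True)
    return ranked[:k]

def build_prompt(question):
    """Put numbered sources in the prompt so the model can cite them as [1], [2]."""
    sources = retrieve(question)
    lines = [f"[{i}] {text} ({url})" for i, (url, text) in enumerate(sources, start=1)]
    header = "Answer using only these sources and cite them:\n"
    return header + "\n".join(lines) + f"\nQuestion: {question}"

print(build_prompt("What is the capital of France?"))
```

The language model then writes its answer from the numbered sources, which is what makes the citations possible.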

Real Use Cases Powering Third-Party Tools

The biggest impact of major large language models (LLMs) is not only in how you use them directly. They act as the “engine” behind thousands of tools made by other companies. Most new artificial intelligence (AI) applications do not build their own models from scratch. Instead, they connect to models like GPT-4 or Claude through APIs.

This setup has let new ways of working grow fast. Startups and large companies are building specialized AI applications on top of these foundation models. For example, a marketing company can use an LLM to power an AI copywriter, and a legal tech business might use one to summarize laws for its clients. These tools give people an easy-to-use way to apply artificial intelligence to a clear job.

Some everyday, real-world examples of artificial intelligence delivered through these third-party tools are:
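The “engine” pattern can be sketched in a few lines: the third-party app supplies a task-specific prompt and interface, and a general-purpose model does the writing. To keep the sketch runnable offline, `fake_llm` is a hypothetical stand-in; in a real product it would be replaced by a call to a provider’s API, whose client library and parameters are not shown here.

```python
from typing import Callable

# Hypothetical stub standing in for a hosted model's API call.
def fake_llm(prompt: str) -> str:
    return f"[model output for a {len(prompt)}-character prompt]"

def make_copywriter(llm: Callable[[str], str]) -> Callable[[str, str], str]:
    """Wrap a general-purpose model in a task-specific tool; the 'app' is mostly the prompt."""
    template = (
        "You are a marketing copywriter.\n"
        "Write a short, upbeat ad for: {product}\n"
        "Target audience: {audience}\n"
    )

    def write_ad(product: str, audience: str) -> str:
        return llm(template.format(product=product, audience=audience))

    return write_ad

write_ad = make_copywriter(fake_llm)
print(write_ad("reusable water bottle", "hikers"))
```

Because the model is passed in as a function, the same tool could be pointed at a different provider without changing the app logic.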

- Customer Service: AI chatbots that use strong LLMs to talk with people and fix customer problems.

- Content Creation: Tools that make social media posts, write blog articles, and create ad copy for you.

- Sales Automation: Tools that write personalized sales emails for sales teams.

These new uses of AI tools help people get work done faster in many areas, from customer service to content creation for social media. This way, both large companies and startups can make the most of artificial intelligence in their own way.

Differences Between AI, Machine Learning, and Deep Learning

The terms artificial intelligence, machine learning, and deep learning often get used like they mean the same thing. But they are not all the same. Artificial intelligence, or AI, is the big field where people try to make machines smart. Machine learning is a subset of AI, and it is about making systems that can get better over time by looking at data, even if no one tells them exactly what to do.

Deep learning goes a step deeper. It is a subset of machine learning. Deep learning uses deep neural networks, which are networks with many layers, to work with vast amounts of data. Knowing this order helps you see how AI systems really work and what each part does.

What Is Machine Learning, and Where Is It Used?

Machine learning is the main tool behind most modern artificial intelligence. As a subset of AI, it lets computers learn from data and get better at a task over time, without anyone writing code for every step. With machine learning, you give an algorithm data, and it finds patterns it can use to solve problems.

There is a difference between artificial intelligence and machine learning. Artificial intelligence is about creating smart tools or machines. Machine learning is a way to reach that goal. Most of today’s AI would not work without machine learning. It plays a big role in many things we use each day. For example, machine learning helps run recommendation systems on streaming apps. It is also used in spam filters to keep bad emails out of your inbox.
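To make “learning from data” concrete, here is a minimal Python sketch of the spam-filter idea. It uses a simplified naive Bayes classifier, one classic technique among many; the four training emails are made up for illustration and the function names are hypothetical. Notice that nobody types in a list of spam words: the code works out which words point to spam by counting them in labeled examples.

```python
import math
from collections import Counter

def train(examples):
    """Count word frequencies per label from (text, label) pairs."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    label_counts = Counter()
    for text, label in examples:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, label_counts, vocab

def classify(model, text):
    """Return the label with the highest log-probability (naive Bayes)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        # Prior: how common this label was in the training data.
        score = math.log(label_counts[label] / total)
        label_total = sum(word_counts[label].values())
        for word in text.lower().split():
            # Laplace smoothing (+1) so unseen words do not zero out the score.
            count = word_counts[label][word] + 1
            score += math.log(count / (label_total + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Made-up training examples (assumption, for illustration only).
training_data = [
    ("win a free prize now", "spam"),
    ("claim your free money today", "spam"),
    ("meeting moved to friday", "ham"),
    ("please review the quarterly report", "ham"),
]

model = train(training_data)
print(classify(model, "free prize money"))        # spam
print(classify(model, "report for the meeting"))  # ham
```

Real filters learn from far more messages, but the principle is the same: with more labeled examples, the word counts, and therefore the guesses, tend to become more reliable.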

In business, machine learning is key to data analytics. Companies use it in predictive modeling to forecast future sales. In fraud detection, it scans payments to spot anything out of the ordinary. In personalized marketing, it helps make sure each person sees the right offer. So, if a job requires spotting patterns in data, machine learning is often the best choice.
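Production fraud systems use far richer models, but the core idea of spotting a payment that is “out of place” can be sketched with a basic statistical check. This example is a simple stand-in for a trained model, not how any particular fraud product works: it learns what “normal” looks like from a customer’s past payments and flags new ones that fall far outside it. The payment amounts are made up, and the threshold of 3 standard deviations is an arbitrary assumption.

```python
from statistics import mean, stdev

def flag_unusual(history, new_payments, threshold=3.0):
    """Flag new payments more than `threshold` standard deviations from past ones."""
    # Learn what "normal" looks like from the customer's payment history.
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation; needs 2+ past payments
    flagged = []
    for amount in new_payments:
        z = (amount - mu) / sigma  # z-score: distance from normal, in std devs
        if abs(z) > threshold:
            flagged.append(amount)
    return flagged

# Hypothetical past payment amounts for one customer (made-up data).
history = [42.0, 38.5, 45.0, 40.0, 39.9, 44.2, 41.3, 43.8]
print(flag_unusual(history, [41.0, 47.5, 980.0]))  # [980.0]
```

The threshold is a trade-off: a lower value catches more fraud but also flags more legitimate payments for review.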

Deep Learning Versus Classic ML

Deep learning is a specialized kind of machine learning that takes learning from data a step further. With classic machine learning, a person often needs to select and extract the most important features from the data before the model can use it. This step is called feature engineering. It can take a lot of time, and it can limit how well the model performs.

Deep learning automates this work. It uses an artificial neural network with many layers, which is why it is called “deep.” Such a network can identify the most important features in raw data on its own. This lets deep learning capture complex patterns, and it performs especially well on unstructured data such as images and text.

The key differences are:

- Data Scale: Deep learning works best with a huge amount of data, while classic machine learning can work with less.

- Feature Engineering: Classic machine learning needs people to pick the important features. Deep learning does this step on its own.

- Complexity: Deep learning uses more complex models and needs more computer power than classic machine learning.
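To show what feature engineering looks like in classic machine learning, the sketch below turns a raw email into a few numbers a classic model could use. The features themselves (word count, exclamation marks, a hand-written list of “trigger” words, share of capital letters) are hypothetical choices a person might make; deciding which ones matter is exactly the manual step that deep learning aims to automate.

```python
def extract_features(email_text):
    """Turn raw text into hand-picked numeric features for a classic ML model."""
    # Hypothetical trigger words that a person decided might signal spam.
    trigger_words = {"free", "winner", "urgent", "prize"}
    words = email_text.lower().split()
    letters = [c for c in email_text if c.isalpha()]
    capital_share = (
        sum(c.isupper() for c in letters) / len(letters) if letters else 0.0
    )
    return {
        "num_words": len(words),
        "num_exclamations": email_text.count("!"),
        # Strip trailing punctuation so "prize!" still counts as "prize".
        "num_trigger_words": sum(w.strip("!.,?") in trigger_words for w in words),
        "capital_share": round(capital_share, 2),
    }

print(extract_features("URGENT! You are a WINNER, claim your free prize!"))
# {'num_words': 9, 'num_exclamations': 2, 'num_trigger_words': 4, 'capital_share': 0.35}
```

A deep learning model would instead take the raw text as input and learn its own internal features during training.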

How These Concepts Relate to LLMs

Large Language Models, or LLMs, come straight out of deep learning. They are built on a specific deep learning architecture called the transformer, which lets them process and generate human language that reads very much like something a person wrote. Without the rapid progress of deep learning, large language models as we know them today would not exist.

AI-powered tools like ChatGPT show how these layers fit together. Artificial intelligence is the overall goal, machine learning is the approach used to reach it, and deep learning is the specific method behind it. An LLM is a deep learning model that has been trained on a huge amount of text.

So, an LLM is not something separate from artificial intelligence, machine learning, or deep learning. It is a clear example of how these ideas fit together: a branch of machine learning that has become highly capable and flexible, and that now shapes what most people picture when they talk about artificial intelligence.
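LLMs are trained on a simple-sounding task: predicting the next word (strictly, the next token) from the text that came before. Real models do this with transformer networks and billions of learned parameters. The toy sketch below is a deliberately simplified stand-in, not how a transformer works internally: it just counts which word follows which in a tiny made-up corpus, to show the “predict the next word from patterns in text” idea.

```python
from collections import Counter, defaultdict

def build_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following

def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())  # .get avoids creating empty entries
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Tiny made-up corpus (assumption, for illustration only).
corpus = (
    "the customer wants a refund . "
    "the customer wants a discount . "
    "the customer wants a refund today ."
)
model = build_bigram_model(corpus)
print(predict_next(model, "wants"))  # a
print(predict_next(model, "a"))      # refund
```

The model “prefers” refund only because it appeared more often in its data. It has no idea what a refund is, which mirrors the pattern-matching point made throughout this guide.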

Evaluating AI Tools: Choosing the Right Software for Your Needs

As a business owner, using AI is not just about following the latest trend. It should be about finding the right tools that help you fix real problems. There are so many AI applications out there. You need a clear way to look at what is worth your time and money. You want to pick software that brings real value, helps with efficiency, and gives you a good return on what you spend.

Don’t let marketing language mislead you. Focus on how well a tool works for your business and its needs. This section will help you choose the AI technology that fits, judge the value it offers, and understand the risks that may come with it.

Key Selection Criteria for Business Owners

How can you tell if your business should use an LLM or another AI tool? To start, try not to get caught up in all the buzz. Think about the main problem you need to solve. Are you looking to make customer support faster, make marketing copy easier, or take a closer look at sales data? The tool you need will depend on the job you have to do.

Once you know what you need, evaluate different AI applications against a few key criteria. Look past flashy features and consider how the tool will fit with the software you already use day to day. Make sure your team will be able to learn how to use it, too.

For business owners, these points matter most:

- Task-Relevance: Is the tool built for the thing you want it to do or improve?

- Accuracy and Reliability: Can you trust its output? If it’s for research, does it cite where the information comes from? If it generates content, is the output consistent?

- Ease of Use: Will it be easy for your team to get the hang of it, or will they be stuck for a long time?

- Integration: Will the tool work with the software you already have up and running?

Identifying Value, Efficiency, and Accuracy

The real value of an AI tool comes from how much it helps you, and you should measure that in a few ways. Does it save money by automating jobs? Does it help you earn more through better marketing? Or does it save time by handling repetitive work so your team does not have to? Before you adopt a tool, estimate how much it could help your business. For example, if an AI chatbot handles 30% of customer questions, how much do you save in labor costs?
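The chatbot question above comes down to simple arithmetic. The sketch below works it through; apart from the 30% share from the example, every input (5,000 questions a month, 6 minutes of agent time per question, a $30 hourly labor cost) is a hypothetical figure to replace with your own.

```python
def monthly_labor_savings(questions_per_month, share_handled_by_ai,
                          minutes_per_question, hourly_labor_cost):
    """Estimate labor cost saved when AI handles a share of customer questions."""
    questions_deflected = questions_per_month * share_handled_by_ai
    hours_saved = questions_deflected * minutes_per_question / 60
    return hours_saved * hourly_labor_cost

# Hypothetical inputs: 5,000 questions/month, 30% handled by the chatbot,
# 6 minutes of agent time each, $30/hour labor cost.
savings = monthly_labor_savings(5000, 0.30, 6, 30)
print(f"Estimated monthly savings: ${savings:,.2f}")  # Estimated monthly savings: $4,500.00
```

This is a gross figure: subscription, setup, and training costs still have to be subtracted before you can judge the real return.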

It is also important that the AI tool helps you work better and faster. A good tool should make your work easier, not harder. Run a small test or pilot first to see how it performs in your real environment. Does the tool actually help you finish work as fast as it claims?

Accuracy matters too, especially if you are working with data or talking to customers. Whether you are building your own AI solution or choosing an existing tool, check carefully for mistakes, bias, or factual errors in its output. A tool that is fast but often wrong can create more problems than it solves.

Assessing Cost Risks and ROI

The cost of AI goes beyond monthly subscription fees. You also need to account for hidden costs: training your team, the time it takes to integrate the AI into your workflows, and the damage if the AI gets things wrong. For instance, if your AI tool is biased when screening job applicants, it can hurt your company’s reputation and even bring legal trouble.

Weigh all these costs against what the AI tool delivers. If you want to know whether a language model or another AI tool is right for your business, start with a small, low-risk project. Pick a clear use case, such as transcribing meeting notes or drafting your social media posts.

Pay close attention to the results. Track how much time your team saves, how much more work gets done, and whether the quality of the work improves. This data helps you calculate your real ROI and build a strong case for expanding AI across your business. That way, you can confirm whether the investment is actually delivering value.
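Once a pilot produces numbers, the ROI comparison described in this section can be written as a simple formula: (total benefit minus total cost) divided by total cost. The sketch below compares first-year benefits against both the subscription and the hidden training and setup costs mentioned earlier; all figures are placeholders, not benchmarks, and the function name is hypothetical.

```python
def first_year_roi(monthly_benefit, monthly_subscription,
                   training_cost, setup_cost):
    """Return first-year ROI as a fraction: (benefit - total cost) / total cost."""
    total_benefit = monthly_benefit * 12
    total_cost = monthly_subscription * 12 + training_cost + setup_cost
    return (total_benefit - total_cost) / total_cost

# Placeholder figures for a small pilot (assumptions, not real data).
roi = first_year_roi(
    monthly_benefit=2000,      # value of time saved per month
    monthly_subscription=500,  # tool license
    training_cost=3000,        # one-time staff training
    setup_cost=5000,           # one-time integration work
)
print(f"First-year ROI: {roi:.0%}")  # First-year ROI: 71%
```

Because training and setup are one-time costs, the same tool usually looks better in year two than in year one, so it can help to compute both.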

Practical Applications of LLMs and AI in Everyday Business

Beyond the hype, how are people actually using artificial intelligence today? For businesses, the biggest benefits of AI and LLMs are saving time, cutting costs, and improving customer service. These tools do not replace people; they help people do their jobs better.

AI applications can take over tedious tasks, and they can also surface valuable insights from your business data. This new technology is changing key roles everywhere. Let’s look at some of the most useful applications of artificial intelligence in business.

Automating Repetitive Tasks Effortlessly

One of the biggest benefits of AI systems is that they can take care of the small, routine work that eats up a lot of employee time. Think of transcribing meetings, summarizing long email threads, or sorting customer feedback. AI systems can do all these jobs very quickly.

For example, an AI system can process a one-hour meeting recording and produce a short summary and a list of action items in under a minute. Some tools can go through hundreds of customer support messages, group them by topic, and send each one to the right team without anyone handling them manually.

By handing these jobs to AI systems, your team gets time back for work that needs more thinking, planning, or creativity. The benefit is not only speed. It can also improve job satisfaction, because it removes tedious work and frees people for projects that matter more.
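The support-message example in this section can be pictured with a short sketch. Production tools typically use a trained model, often an LLM, to decide a message’s topic; here simple keyword rules stand in for that classifier, and the topics, keywords, and team names are all hypothetical.

```python
# Hypothetical topic keywords and the team that owns each topic.
ROUTES = {
    "billing": ({"invoice", "charge", "refund", "payment"}, "Finance"),
    "shipping": ({"delivery", "shipping", "tracking", "late"}, "Logistics"),
    "technical": ({"error", "crash", "login", "bug"}, "Tech Support"),
}

def route_message(message):
    """Return (topic, team) for a message, based on keyword overlap."""
    words = {w.strip(".,!?") for w in message.lower().split()}
    best_topic, best_hits = None, 0
    for topic, (keywords, _team) in ROUTES.items():
        hits = len(words & keywords)  # how many topic keywords appear
        if hits > best_hits:
            best_topic, best_hits = topic, hits
    if best_topic is None:
        # No keywords matched: send to a general queue for a person to triage.
        return ("general", "Customer Service")
    return (best_topic, ROUTES[best_topic][1])

messages = [
    "I was charged twice, please refund the payment.",
    "My delivery is late and tracking has not updated.",
    "The app shows an error when I try to login.",
]
for msg in messages:
    print(route_message(msg))
```

Keeping a fallback queue for messages the system cannot place is a simple way to keep a human in the loop.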

Enhancing Customer Experience and Communication

AI is changing the way businesses talk with their customers. LLM-powered virtual assistants and chatbots can now handle many customer questions with a level of understanding that was not possible before. They can interpret natural language, look up order details, and give instant help at any time of day.

This fast help makes the customer experience much better. People do not have to wait on hold for an agent; they can get answers to common questions right away. At the same time, your human support team has more time for complex, high-priority problems that need a personal approach. As a result, everyone gets help faster.

AI can do more than provide support. It can also personalize messages for each customer. For example, AI can help you write emails for specific customers, suggest products they might like, and even tailor your website to each visitor’s preferences. This personal touch helps people feel understood and keeps them loyal to your business.

Improving Decision-Making and Insights

Artificial intelligence helps you make better choices. It works as a decision support tool by looking through vast amounts of data very fast. It finds things that people may not see. These days, businesses have more data than ever before, but a lot of it gets ignored. AI lets you find what is useful in all that data.

For example, a data analytics platform that uses artificial intelligence can sort through years of sales data. It can show you new trends in the market, tell you what people might buy soon, or help you spot the best ways to reach your customers. When you have these answers, your choices are guided by facts, not just guesses.
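Analytics platforms use far more sophisticated models, but the simplest version of finding a trend in sales data is a straight-line fit. This sketch fits an ordinary least-squares line to five made-up yearly sales totals and extends it one year ahead. Treat it as an illustration of the idea, not a forecasting method to rely on; the function name is hypothetical.

```python
def fit_trend(values):
    """Fit y = slope * x + intercept by least squares, with x = 0, 1, 2, ..."""
    n = len(values)
    xs = range(n)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, values))
    var = sum((x - x_mean) ** 2 for x in xs)
    slope = cov / var
    intercept = y_mean - slope * x_mean
    return slope, intercept

# Made-up annual sales totals (in thousands) for five years.
sales = [120, 135, 149, 162, 178]
slope, intercept = fit_trend(sales)
next_year = slope * len(sales) + intercept  # extend the line to x = 5
print(f"Trend: +{slope:.1f}k per year, next-year projection: {next_year:.1f}k")
# Trend: +14.3k per year, next-year projection: 191.7k
```

A straight line ignores seasonality, market shocks, and everything else that moves sales, which is why real platforms combine many more signals.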

AI-driven decision support reaches every part of the business. It can help you manage supply chains by predicting shipping delays. It can analyze employee feedback and point out ways to improve the workplace. In all these ways, AI turns huge amounts of data into practical, useful answers, giving you a better chance to stay ahead of competitors.

Challenges, Risks, and Ethical Considerations of LLM Adoption

While artificial intelligence has many benefits, it also brings significant risks. Are there any challenges or risks with artificial intelligence? Yes, there are. You have to consider data privacy, security, algorithmic bias, and responsible use. If you ignore these risks, you could face financial loss, legal trouble, or damage to your reputation.

When you add artificial intelligence to your business, you need to be careful and responsible. Clear checks and rules for AI systems are not just nice to have; they are essential. They help prevent harm and build trust in your AI systems.

Data Privacy and Security Risks

When you use artificial intelligence tools, you often send your data to a third-party server. This can bring data privacy and security risks. If you put customer details, business plans, or employee information into a public AI, you might be making that data available to others who should not see it. Someone could misuse the information or it could even get stolen.

Many AI providers may use the data you submit to train their models. Read the terms of service carefully to learn what will happen to your data, how it will be stored, and how it will be protected. Organizations that handle sensitive information may need private AI deployments or on-premises solutions that offer stronger data protection.
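One practical safeguard is to strip obvious personal details before text ever leaves your systems. The sketch below masks email addresses and phone-number-like digit runs using Python’s standard `re` module. The patterns are simplified assumptions that will miss many kinds of personal data (names, street addresses, account numbers), so treat this as an illustration of the idea rather than a complete privacy control.

```python
import re

# Simplified patterns (assumptions); real PII detection needs far broader coverage.
EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")  # 9+ chars of digits/separators

def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text

note = "Customer jane.doe@example.com called from +1 (555) 123-4567 about order 88."
print(redact(note))
# Customer [EMAIL] called from [PHONE] about order 88.
```

Short numbers such as the order number are left alone, because the phone pattern requires a longer run of digits and separators.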

Security is another major risk. AI models can be targeted by attackers. Some may try to tamper with the training data so the AI gives wrong answers, while others may try to break into the system itself. That is why strong security is essential.

Potential for Bias and Errors

AI models are only as fair as the data they are trained on. Most large language models learn from huge amounts of internet text, and that text reflects human biases. As a result, models often pick up and repeat the unfair ideas found in their training data. For example, if you use an AI tool to screen resumes, it may end up favoring candidates from certain groups if its training data reflects biased hiring decisions from the past.
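One common way to check a screening tool for this kind of bias is to compare selection rates across groups. The sketch below computes each group’s rate and the ratio of the lowest rate to the highest. A ratio below 0.8 is the “four-fifths” rule of thumb from US employment guidelines, treated as a warning sign rather than proof. The group labels and counts are made up, and a real audit needs legal and statistical expertise beyond this check.

```python
def selection_rates(outcomes):
    """Map each group to its selection rate; outcomes maps group -> (selected, applicants)."""
    return {group: selected / applicants
            for group, (selected, applicants) in outcomes.items()}

def impact_ratio(outcomes):
    """Divide the lowest group selection rate by the highest."""
    rates = selection_rates(outcomes)
    return min(rates.values()) / max(rates.values())

# Made-up results from a hypothetical AI resume screener.
screening_results = {
    "group_a": (50, 100),  # 50 of 100 applicants advanced
    "group_b": (30, 100),  # 30 of 100 applicants advanced
}

ratio = impact_ratio(screening_results)
print(f"Impact ratio: {ratio:.2f}")  # Impact ratio: 0.60
if ratio < 0.8:
    # Four-fifths rule of thumb: under 0.8 is a warning sign, not proof of bias.
    print("Below the four-fifths threshold: review this tool for possible bias.")
```

Running a check like this regularly, not just at launch, matters because a model’s behavior can drift as the applicant pool changes.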

Language models also often get facts wrong. These mistakes are commonly called “hallucinations.” The AI can state something that sounds convincing but is inaccurate or completely made up, and it can even invent sources to sound more authoritative. If people publish AI-generated content without checking it, false information can spread under your name and damage trust in your brand.

Here are the main risks to look out for:

- Algorithmic Bias: The AI can perpetuate or even amplify unfair treatment of people based on factors like gender, race, or age.

- Factual Inaccuracy: The model might give information that sounds plausible but is wrong.

- Lack of Context: The AI may miss the full meaning or detail of a situation. This can cause it to give answers that do not fit or that miss the right tone.

Responsible Use and Oversight

Given these risks, it is very important to use artificial intelligence with care. People must always stay involved, checking that AI systems work the way they should. You cannot just set up an AI and walk away, especially for high-stakes business tasks. The best way to support AI ethics is to set clear rules for how AI should be used at work.

Setting these rules starts with deciding what you want AI systems to do. Some tasks are fine to leave to AI. Others need a person to review the work and decide whether it is right. For example, it is fine for artificial intelligence to write the first draft of a legal document, but a lawyer must review and approve it before anyone uses it. Human review helps catch mistakes and bias.

The risks are real, but careful use makes these problems much less likely. Be transparent with customers when they are interacting with your AI systems. Check your AI for bias regularly. And clearly define who is accountable for what your AI does.

Conclusion

To sum up, business owners and busy professionals need to understand AI and how large language models work to navigate today’s digital world. These models can do a lot, but they are not perfect. They do not offer real creativity, and they do not remember things the way people do. Everything they produce comes from patterns in their training data and the rules of their algorithms.

When you learn about different AI platforms like ChatGPT, Claude, and DeepSeek, you can choose the one that best fits you and your work. These tools can help you get tasks done with less effort and make better decisions. Still, you need to be careful about data privacy and how you use them. Stay informed about new developments and take a proactive approach to AI so you get the most out of it.

If you want advice tailored to your situation, reach out and set up a free meeting with our team.

Frequently Asked Questions

How do I know if my business should use an LLM or another AI tool?

Start by identifying one task that is highly repetitive or takes up a lot of your time. If the task involves language, such as writing, summarizing, or answering questions, an LLM can work well. If it involves numerical data, forecasting, or images, other types of AI or machine learning tools might work better. Either way, the right tool can help you do those tasks faster and with less effort.

Are there any privacy concerns with using public LLMs like ChatGPT?

Yes, using public LLMs like ChatGPT can raise privacy concerns. The platform may collect your data and use it to train new models. If you share sensitive information in a chat, it could be exposed or misused. Always read the platform’s privacy policy before you use it.

What future developments can we expect in AI and LLM technology?

Future AI and LLM technology will likely bring a better understanding of context. Systems will get faster and more efficient, with stronger safety measures built in. We may also see major progress in how these models handle several types of data, such as text, images, and audio, at the same time. These improvements should make it easier for people to work with and communicate with machines.